Innovations of the past two decades in embedded systems, robotics, industrial automation and autonomous vehicles all have one thing in common: they depend on allowing machines to “see” the world the way we do, in three dimensions.

The human eye and brain have evolved to let us interact naturally with the world around us, so we tend to take our three-dimensional reality for granted. Allowing machines to perceive the world the way we do, however, requires some kind of depth-sensing technology.

Device Types

Time-of-Flight (ToF) and LiDAR are two of several commonly used depth-sensing technologies. There is no single “one size fits all” solution that is perfect for every application, and in some cases it's useful to combine multiple depth-sensing approaches to get the advantages that each one offers.

Structured Light Cameras

Structured light cameras use a projector to illuminate the scene with a known pattern of stripes, bars, or even points of light. By observing distortions in the reflected pattern, structured light cameras can compute the depth and contours of objects in the scene.

Structured light cameras are similar to stereo cameras in that they depend on the baseline, or offset, between the light projector and the camera lens to triangulate the depth of each point of the reflected pattern in the scene.

Some structured light cameras will rapidly scan the surfaces in the scene with light patterns that are phase-shifted in order to more accurately compute contours that might not be apparent with a single scan. Some structured light 3D scanner products combine a projector with stereo cameras for added precision.
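
The phase-shifting step can be sketched with the textbook N-step phase-shifting formula. This is a minimal illustration for a single pixel, assuming ideal sinusoidal fringe patterns; the function name `wrapped_phase` is hypothetical, and a real scanner would still need phase unwrapping and calibrated projector-camera triangulation (not shown) to turn this phase into depth.

```python
import math


def wrapped_phase(intensities):
    """Recover the wrapped phase at one camera pixel from N phase-shifted captures.

    Assumes capture n was lit by a sinusoidal fringe pattern shifted by 2*pi*n/N,
    so that I_n = A + B * cos(phi + 2*pi*n/N), where A is the background level,
    B the fringe contrast and phi the phase we want to recover.
    """
    n_steps = len(intensities)
    if n_steps < 3:
        raise ValueError("need at least three phase-shifted captures")
    s = sum(i * math.sin(2 * math.pi * n / n_steps) for n, i in enumerate(intensities))
    c = sum(i * math.cos(2 * math.pi * n / n_steps) for n, i in enumerate(intensities))
    # The background A cancels out of both sums; the result lies in (-pi, pi].
    return math.atan2(-s, c)


# Simulate one pixel whose true phase is 1.0 rad, captured with four shifted patterns.
samples = [120 + 45 * math.cos(1.0 + 2 * math.pi * n / 4) for n in range(4)]
print(round(wrapped_phase(samples), 6))  # 1.0
```

Because the phase only repeats every fringe period, several captures with different fringe frequencies are often combined to resolve which period a pixel belongs to.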

Structured light cameras produce very accurate depth maps, with a precision of up to 100 micrometers, but they are typically useful only at very short operating ranges and lose precision rapidly as the range increases.

Computing the resulting depth maps is also computationally expensive and takes longer than with other depth-sensing technologies. Because of this, structured light cameras are generally not suited to real-time applications and work best with a stationary subject.

Ambient light can also interfere with the projected patterns, so structured light cameras are generally only used indoors where the lighting conditions can be controlled.

Stereo Depth Cameras

Stereo depth cameras work in the same way as human binocular vision: they use two cameras set a few centimeters apart. Software in the camera's processing unit detects the same features in the images from both sensors. Each feature appears at a slightly different position in each image, and the software uses this offset (the disparity) to calculate the depth of that point by triangulation.
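
The triangulation step can be sketched for an idealized, rectified pair of pinhole cameras. This assumes feature matching has already produced a disparity in pixels; the function name, focal length and baseline below are illustrative values, not the parameters of any particular camera.

```python
def stereo_depth_m(disparity_px, focal_length_px, baseline_m):
    """Depth of one matched feature in a rectified stereo pair.

    Similar triangles give Z = f * B / d, where f is the focal length in pixels,
    B the baseline in meters and d the disparity (horizontal offset) in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means infinite depth")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera: 640 px focal length, 5 cm baseline.
for d in (64, 16, 4):
    print(d, "px ->", round(stereo_depth_m(d, 640, 0.05), 3), "m")
```

With these assumed numbers, disparities of 64, 16 and 4 pixels correspond to 0.5 m, 2 m and 8 m: depth is inversely proportional to disparity, so distant points produce very small offsets.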

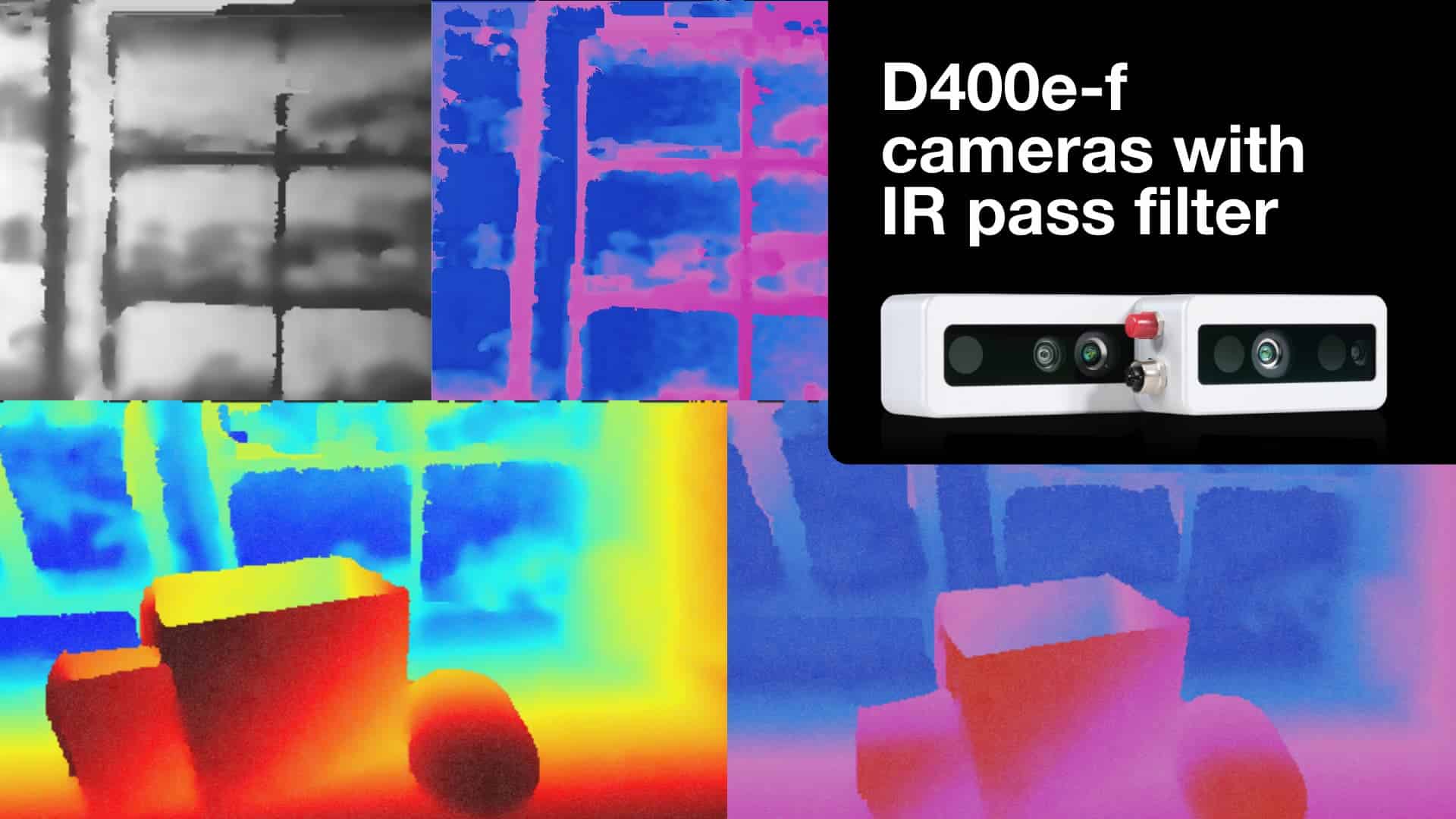

Most stereo depth cameras also employ active sensing and include a patterned light projector to help find corresponding points on otherwise flat or featureless surfaces.

These cameras typically use near-infra-red (NIR) sensors that can see the projected infra-red pattern as well as visible light. Some depth cameras, like the Intel RealSense™ camera, also include an RGB camera sensor to overlay color information on the resulting depth map.

While detecting and correlating features in both sensor images can be computationally expensive, stereo depth cameras are quite effective at providing real-time depth information in a wide variety of lighting conditions. However, they have a limited effective operating range, which depends on the baseline (the separation between the two main image sensors) and on the resolution of the image sensors. As objects get farther from the camera, the offset between corresponding features becomes too small for the sensors to resolve.
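
Differentiating the triangulation relation Z = f·B/d shows why the usable range is limited: a fixed disparity error produces a depth error that grows with the square of the distance. The sketch assumes a rectified pinhole stereo pair; the function name and the quarter-pixel matching error are illustrative assumptions, since real matching accuracy depends on scene texture, the projected pattern and the algorithm.

```python
def depth_uncertainty_m(depth_m, focal_length_px, baseline_m, disparity_error_px=0.25):
    """Approximate depth error caused by a given disparity (matching) error.

    From Z = f * B / d, a small disparity error dd produces a depth error of
    roughly Z**2 * dd / (f * B), so the error grows with the square of the distance.
    """
    return depth_m ** 2 * disparity_error_px / (focal_length_px * baseline_m)


# Hypothetical camera: 640 px focal length, 5 cm baseline, quarter-pixel matching error.
for z in (1.0, 3.0, 6.0):
    print(f"{z:.0f} m -> +/- {100 * depth_uncertainty_m(z, 640, 0.05):.1f} cm")
```

Under these assumptions the error grows from under 1 cm at 1 m to about 28 cm at 6 m; doubling either the baseline or the focal length (in pixels) halves it.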

Stereo depth cameras are typically effective at ranges of up to 6 meters from the camera.

LiDAR Sensors

LiDAR (Light Detection and Ranging) systems use a focused laser emitter that scans back and forth to project a raster pattern of points of light on the scene they are recording.

Each time the LiDAR system emits a pulse of light, its sensor records the interval between the moment the pulse is emitted and the moment its reflection returns to the sensor. Because the speed of light is known, this interval lets the system compute the distance to whatever object reflected the light.
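
The distance calculation itself is simple: the pulse covers the distance twice, so d = c·t/2. A minimal sketch with hypothetical function names, which also shows why LiDAR timing electronics must resolve fractions of a nanosecond:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # in vacuum; the value in air is ~0.03% lower


def range_from_round_trip_m(round_trip_s):
    """Distance to the reflecting object: the pulse travels out and back, so halve it."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range_s(range_m):
    """Inverse: how long a pulse takes to return from an object at range_m."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_PER_S


print(range_from_round_trip_m(1e-6))        # ~149.9 m for a 1 microsecond echo
print(range_from_round_trip_m(1e-9))        # 1 ns of timing error is ~15 cm of range
print(round_trip_for_range_s(200.0) * 1e9)  # ~1334 ns for an object 200 m away
```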

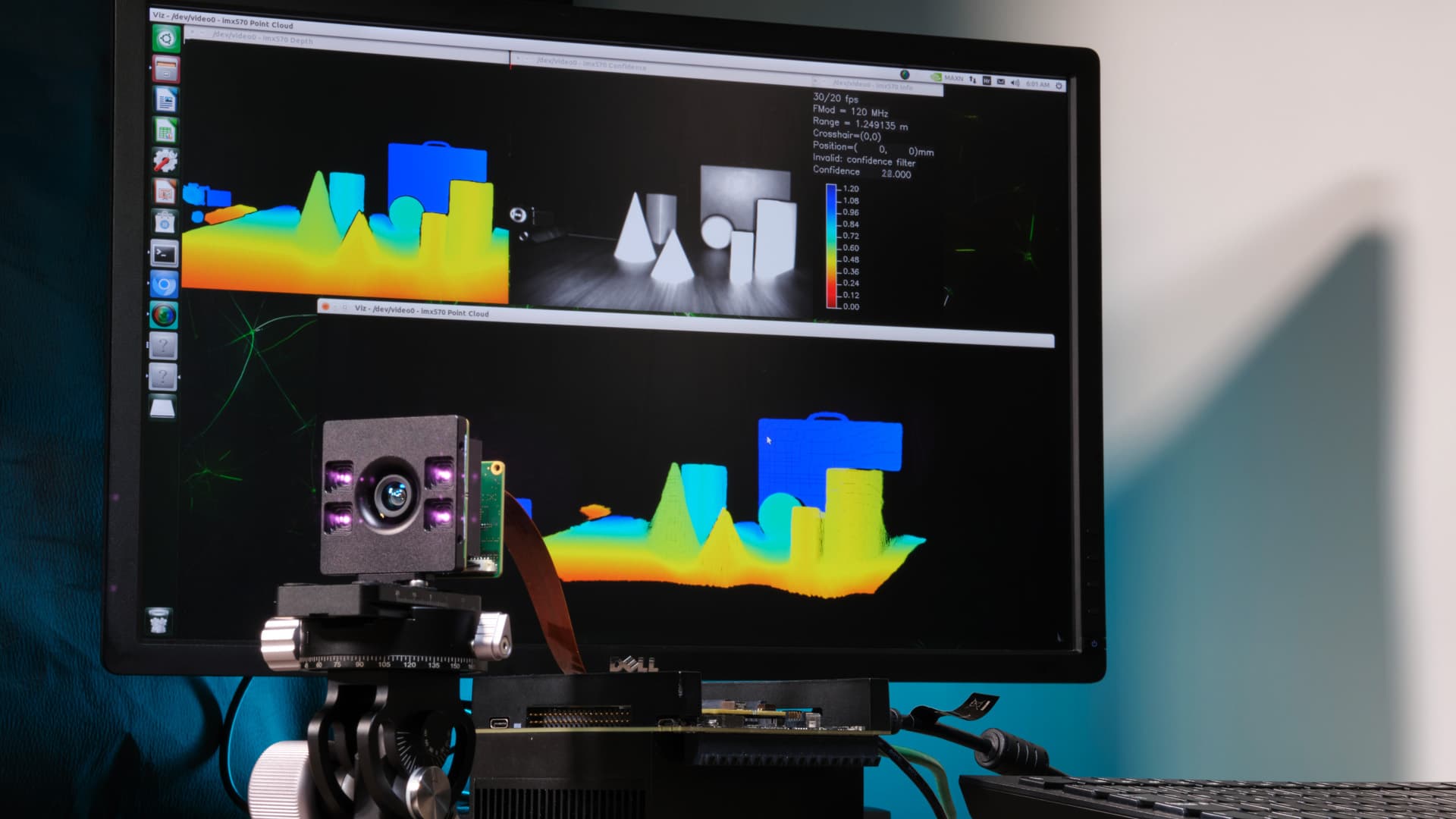

LiDAR sensors scan the scene to produce a single ‘frame’ of data from the measured distances and the directions in which the beam was pointed. A frame can contain anywhere from a few hundred to many thousands of individual points. Each time the LiDAR system completes a scan, it generates a “point cloud” from the positions of these points. LiDAR systems can also operate in a continuous streaming mode, and the resulting data can be used to build up a 3D map of the area being recorded.
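
Turning each measurement into a point is a spherical-to-Cartesian conversion. The sketch below assumes each return is reported as a range plus the beam's azimuth and elevation angles; actual sensors use their own axis conventions and data formats, so the frame convention and the function names here are hypothetical.

```python
import math


def return_to_point(range_m, azimuth_rad, elevation_rad):
    """Convert one LiDAR return (range plus beam direction) to x, y, z in meters.

    Assumed sensor frame: x forward, y left, z up. Azimuth is measured in the
    horizontal plane from +x toward +y; elevation is measured up from that plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


def scan_to_point_cloud(returns):
    """Build one 'frame' (a point cloud) from (range, azimuth, elevation) tuples."""
    return [return_to_point(r, az, el) for r, az, el in returns]


# A toy raster scan: 5 azimuth steps x 3 elevation steps, every return at 20 m.
returns = [
    (20.0, math.radians(az), math.radians(el))
    for el in (-2.0, 0.0, 2.0)
    for az in range(-10, 11, 5)
]
cloud = scan_to_point_cloud(returns)
print(len(cloud), cloud[7])  # 15 (20.0, 0.0, 0.0)
```

Accumulating many such frames into a 3D map additionally requires knowing the sensor's own pose for each frame, which this sketch leaves out.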

There is a great deal of variation in LiDAR systems, depending on the intended application. Because they use a collimated, focused laser beam to scan the target area, LiDAR sensors can be effective at extremely long ranges of up to several hundred meters, but small, low-power LiDAR sensors are also used for short-range depth sensing. They work in a wide variety of lighting conditions, though, like all active sensing technologies, they are sensitive to ambient light when operated outdoors.

LiDAR sensors typically use infra-red lasers at one of two wavelengths: 905 nanometers or 1550 nanometers. The shorter-wavelength lasers are less likely to be absorbed by water in the atmosphere and are more useful for long-range surveying, while the longer-wavelength lasers are more likely to be eye-safe, which suits applications like allowing robots to navigate around humans.

ToF Camera Systems

Time-of-Flight cameras are another widely used active depth-sensing technology. There are two distinct approaches to Time-of-Flight depth sensing, each with its own advantages for a given application: Direct Time-of-Flight cameras (sometimes called “dToF” cameras) and Indirect Time-of-Flight, or iToF, cameras.

Direct Time-of-Flight (dToF)

Like LiDAR sensors, direct ToF cameras work by scanning the scene with pulses of invisible infra-red laser light, and then observing the light that is reflected by objects in the scene. The distance to each point can be computed based on the time it takes for a pulse of light to travel from the emitter to an object in the scene, and then back again.

Direct ToF cameras use a special kind of sensing pixel called a Single-Photon Avalanche Diode (SPAD), which senses the sudden spike in photons when a pulse of light is reflected back to it and records the interval. These sensing elements are comparatively large and are read out in groups as the laser scan progresses.
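
The article does not detail how the recorded intervals become a distance. One widely used approach in SPAD-based sensors, time-correlated single-photon counting, accumulates photon timestamps from many laser pulses into a histogram and takes its peak as the echo. The sketch below illustrates that idea for one pixel; the function name, bin width, maximum range and synthetic data are illustrative assumptions, and real sensors do this in on-chip hardware with further corrections (for example for pile-up and detector dead time).

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def histogram_distance_m(arrival_times_s, bin_width_s=250e-12, max_range_m=10.0):
    """Estimate distance from SPAD photon arrival times via a histogram peak.

    Ambient photons arrive at random times and spread thinly over all bins, while
    photons from the reflected laser pulse pile up in the bin(s) matching the true
    round-trip time, so the fullest bin marks the echo.
    """
    max_time_s = 2.0 * max_range_m / SPEED_OF_LIGHT_M_PER_S
    n_bins = int(max_time_s / bin_width_s) + 1
    counts = [0] * n_bins
    for t in arrival_times_s:
        if 0.0 <= t < max_time_s:  # ignore timestamps outside the range window
            counts[int(t / bin_width_s)] += 1
    peak_bin = max(range(n_bins), key=counts.__getitem__)
    peak_time_s = (peak_bin + 0.5) * bin_width_s  # use the bin centre
    return SPEED_OF_LIGHT_M_PER_S * peak_time_s / 2.0


# Synthetic pixel: 50 echo photons from a target at 3 m, plus one ambient photon per ns.
echo_time_s = 2 * 3.0 / SPEED_OF_LIGHT_M_PER_S
timestamps = [echo_time_s] * 50 + [i * 1e-9 for i in range(60)]
print(round(histogram_distance_m(timestamps), 3))
```

The estimate lands within about 2 cm of 3 m here, limited by the 250 ps bin width (3.75 cm of range per bin); finer bins or interpolating around the peak would tighten it.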

Because of the way these sensors work, direct ToF cameras tend to be fairly low-resolution. However, direct ToF cameras are compact, relatively inexpensive, and useful for a wide range of applications where high resolution or real-time performance is not required.

Indirect Time-of-Flight (iToF)

Indirect Time-of-Flight or “iToF” cameras use diffuse infra-red laser light from one or more emitters to illuminate the entire scene in a series of modulated laser pulses, or flashes. The light is continuously modulated by pulsing the laser emitters at a high frequency.

Rather than directly measuring the interval between each pulse of light and its return to the camera, iToF cameras record the phase shift of the modulation waveform in each pixel of the sensor. From how far the waveform is shifted in each pixel, it's possible to compute the distance to the corresponding point in the scene.
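
The phase-to-distance step can be sketched with the common four-sample (four-phase) demodulation scheme for continuous-wave iToF. The article does not say which scheme a given sensor uses, so treat the sampling model, the 20 MHz modulation frequency and the function names below as illustrative assumptions; real sensors add calibration, multi-frequency unwrapping and noise filtering.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def itof_distance_m(c0, c90, c180, c270, mod_freq_hz):
    """Distance from four correlation samples taken at 0/90/180/270 degree offsets.

    Assumes sample k behaves like offset + amplitude * cos(phi - k * pi / 2),
    where phi is the phase delay of the returning light. Differencing opposite
    samples cancels the constant offset.
    """
    phase = math.atan2(c90 - c270, c0 - c180) % (2.0 * math.pi)
    # The light travels out and back, so d = c * phi / (4 * pi * f_mod).
    return SPEED_OF_LIGHT_M_PER_S * phase / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range_m(mod_freq_hz):
    """Beyond this distance the phase wraps around and distances alias."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * mod_freq_hz)


def simulate_samples(distance_m, mod_freq_hz, offset=100.0, amplitude=40.0):
    """Ideal, noise-free samples for a target at distance_m (for illustration only)."""
    phi = 4.0 * math.pi * mod_freq_hz * distance_m / SPEED_OF_LIGHT_M_PER_S
    return [offset + amplitude * math.cos(phi - k * math.pi / 2) for k in range(4)]


f_mod = 20e6  # hypothetical 20 MHz modulation frequency
print(round(unambiguous_range_m(f_mod), 3))                             # about 7.495 m
print(round(itof_distance_m(*simulate_samples(2.5, f_mod), f_mod), 3))  # recovers 2.5 m
print(round(itof_distance_m(*simulate_samples(9.0, f_mod), f_mod), 3))  # aliases to ~1.505 m
```

The last line shows the trade-off of phase measurement: a target beyond c/(2·f_mod) produces the same phase as a closer one, which is why lower modulation frequencies, or combinations of several frequencies, are used for longer ranges.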

Indirect ToF sensing technology makes it possible to determine the distance to all points in the scene in a single shot.

Advantages and Disadvantages of ToF

All depth-sensing technologies have their attendant advantages and disadvantages. There is no “one-size-fits-all” technology that is perfectly suited for every application. However, Time-of-Flight cameras offer advantages that make them very useful in the right context.

Advantages of ToF Cameras

ToF cameras typically have no moving parts. This is true of all indirect ToF cameras, which use diffuse laser illumination, though some direct Time-of-Flight cameras do use MEMS (Micro-Electro-Mechanical Systems) chips or other moving parts to direct the laser.

ToF cameras are compact, lightweight, and relatively inexpensive. Depending on the power required by their laser emitters, they can be made small enough to embed in very small devices, including smartphones.

All ToF cameras can operate in very low light or even complete darkness, since they provide their own illumination. Their accuracy is exceeded only by that of structured light cameras, and typically ranges from 1 mm to 1 cm, depending on the operating range of the camera.

Indirect ToF cameras in particular provide high-resolution, high-fidelity depth information at up to 640×480 pixels (VGA resolution).

Because they capture the entire scene in a single frame rather than scanning it, iToF cameras are also very fast, delivering depth data at up to 60 frames per second. This makes them well suited to a wide variety of high-speed and real-time applications.

ToF cameras are also relatively inexpensive to build and procure when compared to other depth-sensing technologies like Structured Light Cameras and LiDAR sensors.

Disadvantages of ToF Cameras

ToF cameras do have some disadvantages. In brightly-lit situations or outdoors, the light from the laser emitters can be washed out by ambient light. Indirect ToF cameras in particular can also be confused by highly reflective surfaces or retroreflective materials, and all ToF cameras can be confused by encroaching light from other ToF cameras operating in the same field of view.

For this reason, other depth-sensing technologies, such as stereo depth cameras, may be more effective for applications that must operate outdoors or where multiple depth cameras need to operate in the same area.

However, as ToF technology continues to evolve, it is becoming more robust and flexible. Sony Semiconductor Solutions recently released the IMX570 ToF Sensor, which features a “pixel drive” processing circuit to reduce the effects of unwanted ambient light. This improves the accuracy and effective operating range of the sensor in highly illuminated environments or outdoors under bright sunshine.

Applications of Time-of-Flight Technology

Like any depth-sensing technology, Time-of-Flight cameras have some limitations and drawbacks. However, their flexibility and ease of deployment make them a good fit for a variety of applications.

ToF cameras are used for depth sensing in industrial and robotics applications, including machine vision for pick-and-place robots, object recognition on assembly lines, object classification for robotics, and navigation for mobile robots and Automated Guided Vehicles (AGVs). A good example is a guidance system for autonomous forklifts in an automated warehouse.

Because ToF cameras provide their own illumination, they can be used in a wide variety of environments and lighting conditions: indoors and, within the limits imposed by bright ambient light, outdoors.

Logistics and Warehouse Automation

One of the advantages of ToF cameras is that they produce high-resolution depth data at high speed, at frame rates of up to 60 fps. This means that a single ToF camera mounted over a conveyor belt, for example, can measure packages precisely in all three dimensions, so they can be sorted by size as quickly as the conveyor belt can run.
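
The dimensioning step can be sketched for an idealized setup: a camera looking straight down at the belt from a known distance, with one box in view aligned to the image axes. Everything here (the function name, the height threshold and the synthetic depth map) is a hypothetical illustration rather than how any particular product works.

```python
from typing import List, Optional, Tuple


def box_dimensions_m(
    depth_map: List[List[float]],
    belt_distance_m: float,
    fx_px: float,
    fy_px: float,
    min_height_m: float = 0.01,
) -> Optional[Tuple[float, float, float]]:
    """Rough (length, width, height) of a single box seen from directly above.

    Assumes a downward-facing camera, one axis-aligned box in view, depth values in
    meters (0 = no valid measurement) and a pinhole lens with focal lengths in pixels.
    """
    box_pixels = [
        (row, col)
        for row, line in enumerate(depth_map)
        for col, z in enumerate(line)
        if z > 0 and belt_distance_m - z > min_height_m  # closer than the belt surface
    ]
    if not box_pixels:
        return None
    rows = [r for r, _ in box_pixels]
    cols = [c for _, c in box_pixels]
    top_z = min(depth_map[r][c] for r, c in box_pixels)  # nearest point = box top
    height = belt_distance_m - top_z
    # At distance z, one pixel covers z / f meters of the scene (pinhole model).
    length = (max(rows) - min(rows) + 1) * top_z / fy_px
    width = (max(cols) - min(cols) + 1) * top_z / fx_px
    return length, width, height


# Synthetic 100 x 100 depth map: belt 1.5 m away, 40 x 50 pixel box top at 1.2 m.
depth = [[1.5] * 100 for _ in range(100)]
for r in range(20, 60):
    for c in range(30, 80):
        depth[r][c] = 1.2
print([round(v, 3) for v in box_dimensions_m(depth, 1.5, fx_px=100.0, fy_px=100.0)])  # [0.48, 0.6, 0.3]
```

A production system would also reject noisy pixels, fit the box outline to handle rotated packages, and use a robust statistic such as the median for the top surface rather than the minimum.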

For this reason, ToF cameras are enjoying widespread adoption in logistics and warehouse automation applications, where they are used for sorting packages, or to allow robots to quickly and accurately handle, stack and palletize packages.

Mobility and Automotive

ToF cameras are finding their way into mobility, transport, and automotive applications, both inside and outside the vehicle. Because of the precise, real-time depth information they provide, ToF cameras are useful for situational awareness around the vehicle.

ToF cameras are used to provide navigation and situational awareness for self-driving cars, and accurate range data for driver-assistance features such as automatic parking assist.

ToF cameras are also used inside vehicles for attention tracking (to make sure the driver is watching the road), gesture recognition, and passenger monitoring (to make sure everyone is safely in their seats).

Retail Automation

New applications for ToF cameras are emerging in the burgeoning retail automation space. Because of their ability to perceive depth, ToF cameras aren’t confused when people are closely packed together, so they are useful for applications like people counting, tracking and localization, and for flow analysis (to observe how people move through a retail space).

ToF cameras are also used in seamless checkout systems, because they are good at recognizing gestures and whether or not people are holding objects.

Entertainment and Gaming

An emerging market for the application of ToF camera systems is entertainment. ToF cameras can be made sufficiently inexpensive and compact to be included in a wide array of consumer devices, including mobile phones. Because they provide their own illumination, ToF cameras are already being incorporated into cell phones to provide a focus assist system for visible light cameras in low light situations.

Because they record depth information, ToF cameras are also very useful for gesture recognition, since they are not confused when the subject's hands pass in front of their body. Some video game consoles and virtual reality systems use ToF cameras to track players' hand and body positions for a more immersive in-game experience.

Comparison of Depth Sensing Technologies

The table below compares advantages and disadvantages of the depth-sensing technologies discussed in this document:

| Property | Structured Light | Stereo Vision | LiDAR | dToF | iToF |
|---|---|---|---|---|---|
| Principle | Observes distortions in projected pattern | Compares features in two stereo images | Measures transit time of reflected light from an object | Measures transit time of reflected light from an object | Measures phase shift of modulated light pulses |
| Software Complexity | Very high | High | Low | Low | Medium |
| Relative Cost | High | Low | Varies | Low | Medium |
| Accuracy | µm - mm | cm | Depends on range | mm - cm | mm - cm |
| Operating Range | Low, but scalable | ~6 m | Very scalable | Scalable | Scalable |
| Low Light | Good | Weak | Good | Good | Good |
| Outdoor | Weak | Good | Good | Fair | Fair |
| Scan Speed | Slow | Medium | Slow | Fast | Very fast |
| Compactness | Medium | Low | Low | High | Medium |
| Power Consumption | High | Scalable - Low | Scalable - High | Medium | Scalable - Medium |

Evaluate iToF for Your Application

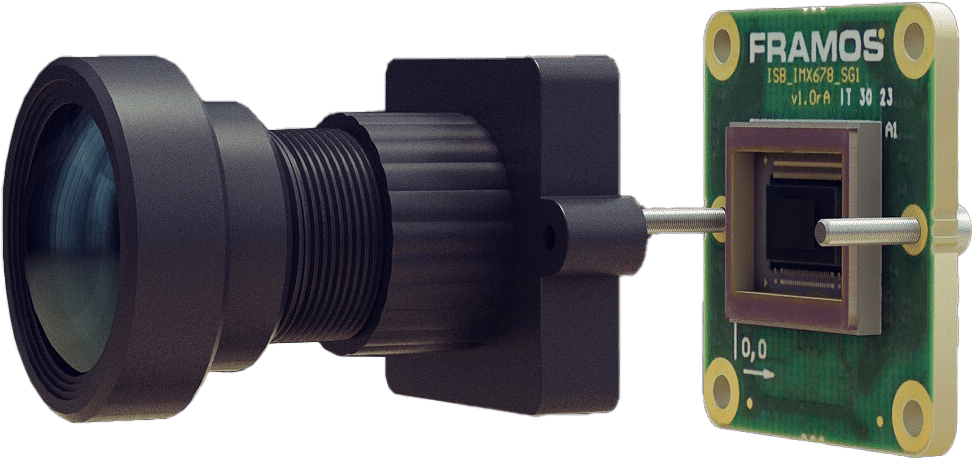

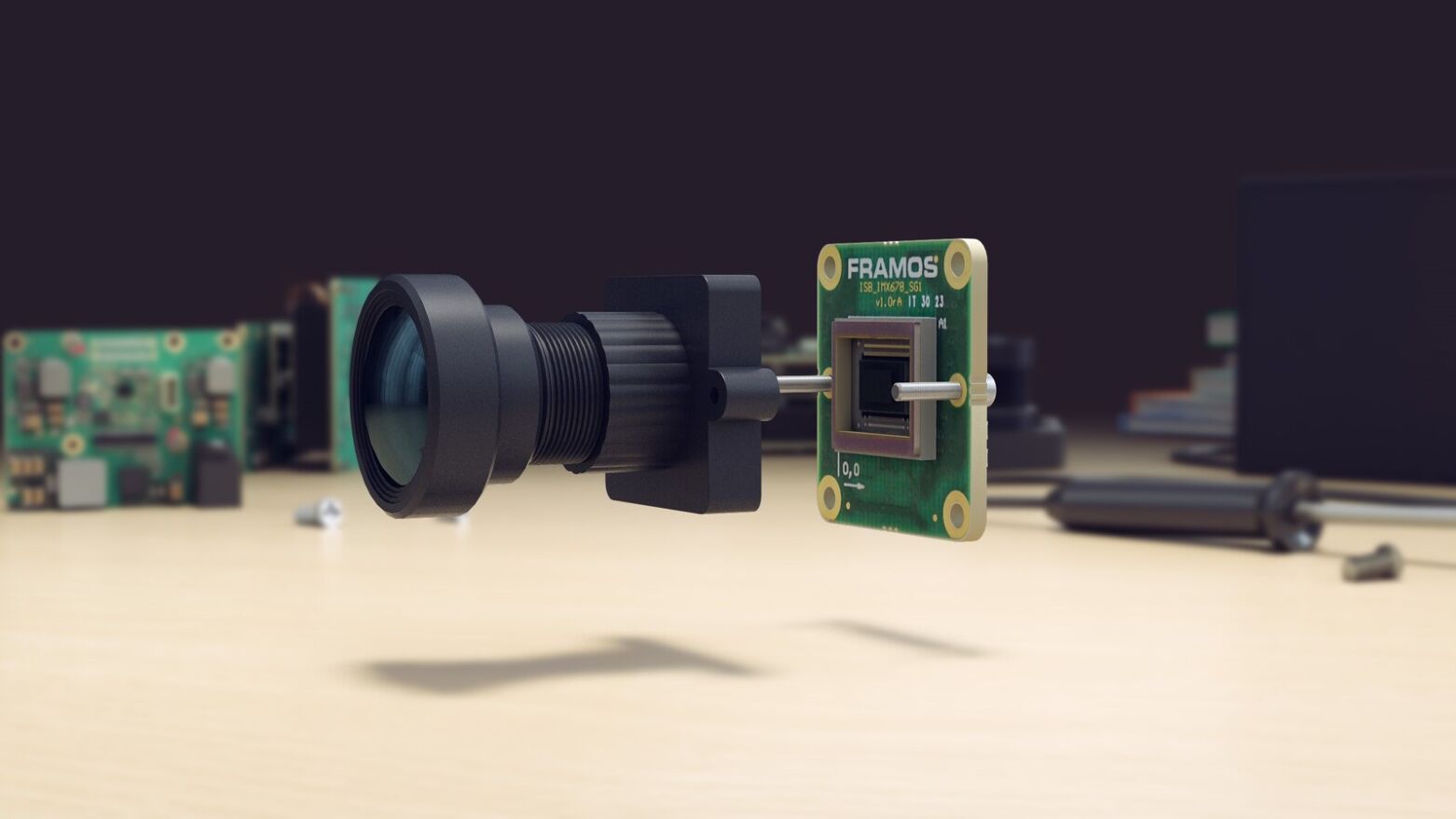

FRAMOS has introduced a ToF camera development kit for vision system engineers who are investigating Time-of-Flight technology, or who are working to develop ToF cameras for machine vision applications. The FSM-IMX570 development kit provides vision system engineers with a simple, coherent framework for quickly developing a working prototype of an indirect Time-of-Flight (iToF) camera system based on Sony’s industry-leading iToF technology.

If you are interested in evaluating Time-of-Flight technology for a new application, or have a camera development project in mind, the FSM-IMX570 development kit can provide you with an easy way to experiment with the technology or to develop your own prototype camera system.
