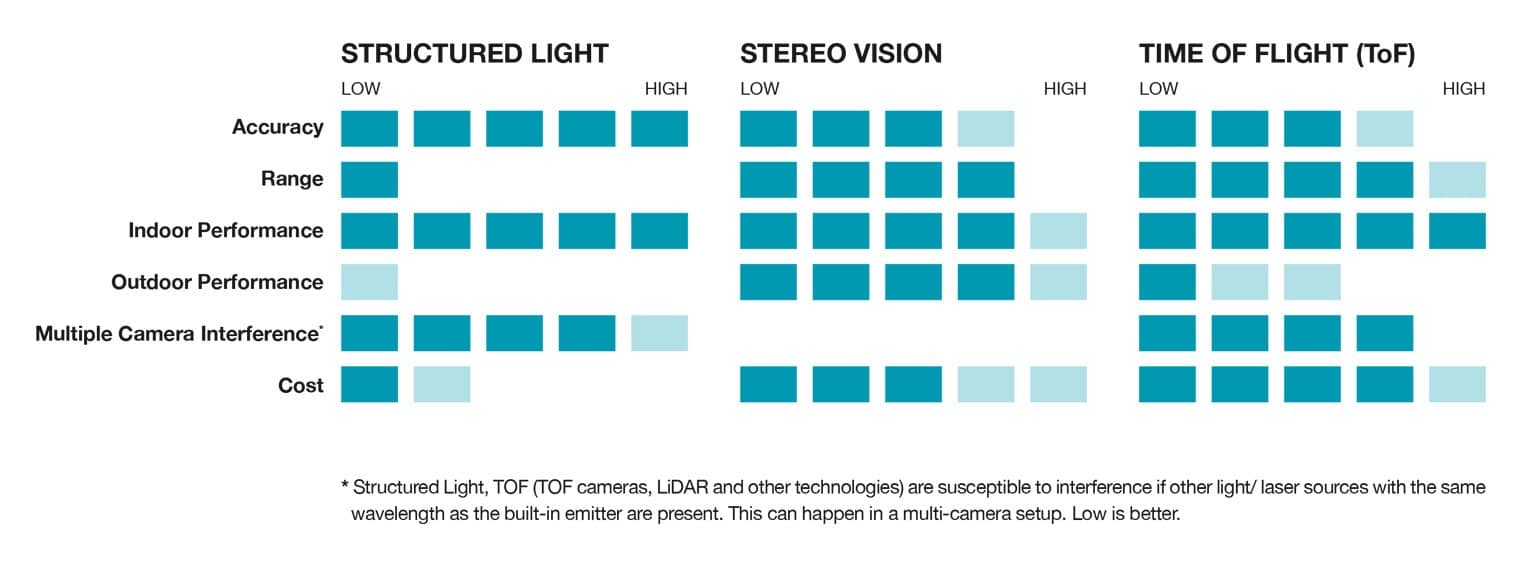

3D depth sensing technologies enable devices and machines to sense their surroundings. In recent years, depth measurement and three-dimensional perception have gained importance in many industries and applications, including process optimization and automation in industrial environments, robotics, and autonomous vehicles. There are many different physical and technological approaches to generating depth information. Below is an overview of the most important methods to help you understand how they compare to one another and to assess which method works best for your application.

3D Depth Sensing

WHITEPAPER "3D Vision for the Industry"

Working Principle Stereo Vision

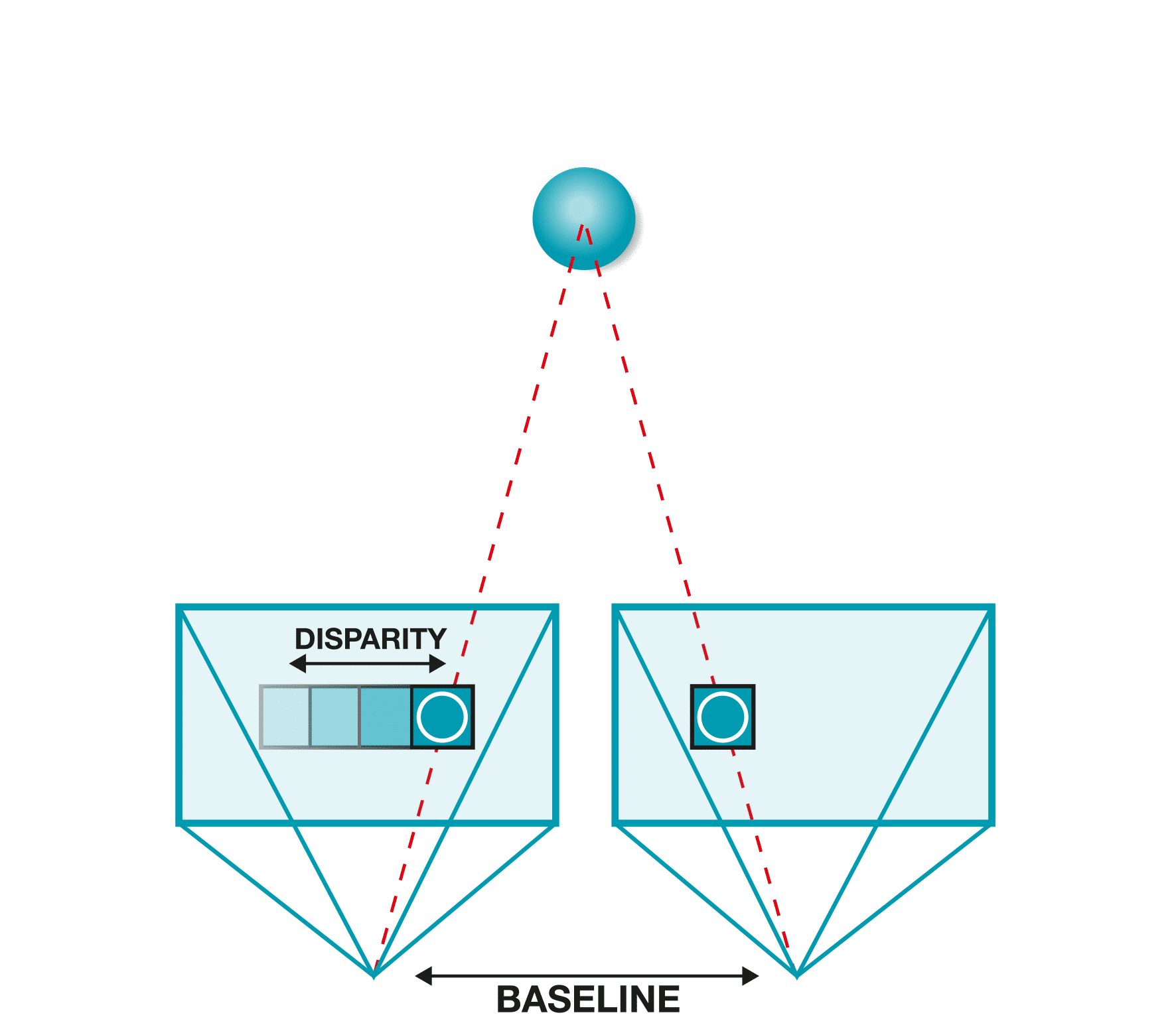

In principle, stereo vision mimics human vision. The technology obtains quantitative depth information by recording an object with two cameras mounted on a common baseline, with a fixed distance between the two lens centers. Each camera therefore captures the scene from a slightly different angle. The closer an object is, the further apart its features are shifted laterally between the two images. This shift is referred to as disparity, and a depth map is calculated from the disparity values between the two images.

Passive systems use available ambient light or artificial lighting to illuminate the object. Active systems employ a light source that projects random patterns onto the visible scene, adding features that make it easier to match corresponding points in the two captured images. Most commonly, infrared laser projectors with pseudo-random patterns are used, but structured light is also an option. The benefits of active stereo vision are its robustness under changing lighting conditions and easy multi-camera setups, as the cameras do not interfere with one another. Stereo depth cameras come in all price ranges, depending on the required accuracy level and range.
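For a rectified stereo pair, disparity and depth are related by the standard triangulation formula Z = f · B / d, with the focal length f and disparity d in pixels and the baseline B (distance between the lens centers) in meters. The sketch below is a minimal illustration under stated assumptions: rectified images with the left camera on the left, and disparity found by sum-of-absolute-differences (SAD) block matching along a single image row. The function names, window size and camera values are hypothetical; real stereo cameras use considerably more elaborate matching.

```python
def match_disparity(left_row: list[float], right_row: list[float], x: int,
                    half_window: int, max_disparity: int) -> int:
    """Find the disparity of pixel x in the left row by SAD block matching.

    Assumes rectified images: a scene point at column x in the left image
    appears at column x - d in the right image, with d >= 0.
    """
    if x - half_window < 0 or x + half_window >= len(left_row):
        raise ValueError("matching window must lie inside the left row")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        xr = x - d
        if xr - half_window < 0:
            break  # the window would leave the right image
        # Sum of absolute differences between the two windows.
        cost = sum(abs(left_row[x + k] - right_row[xr + k])
                   for k in range(-half_window, half_window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px: float, focal_length_px: float,
                         baseline_m: float) -> float:
    """Triangulate depth Z = f * B / d in meters."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (d = 0 means infinitely far)")
    return focal_length_px * baseline_m / disparity_px


# Toy rows: the feature [9, 5, 7] is shifted by 3 pixels between the two views.
left = [0.0] * 20
right = [0.0] * 20
left[10:13] = [9.0, 5.0, 7.0]
right[7:10] = [9.0, 5.0, 7.0]

d = match_disparity(left, right, x=11, half_window=2, max_disparity=6)
z = depth_from_disparity(d, focal_length_px=600.0, baseline_m=0.05)
print(d, z)
```

Because Z is inversely proportional to d, a one-pixel matching error costs far more depth accuracy for distant objects (small d) than for close ones, which is one reason accuracy and range drive the price of stereo cameras.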

Stereo Vision

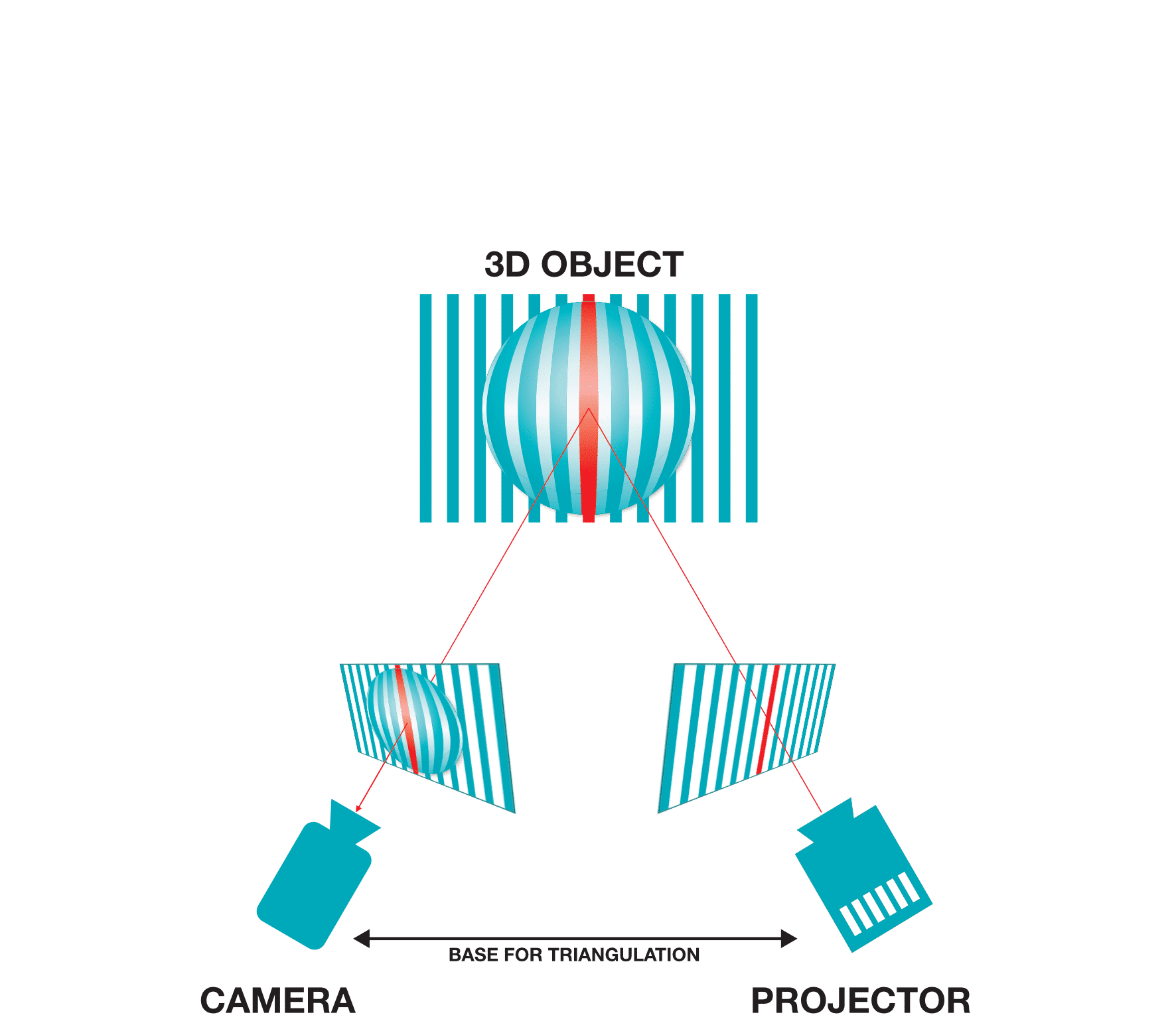

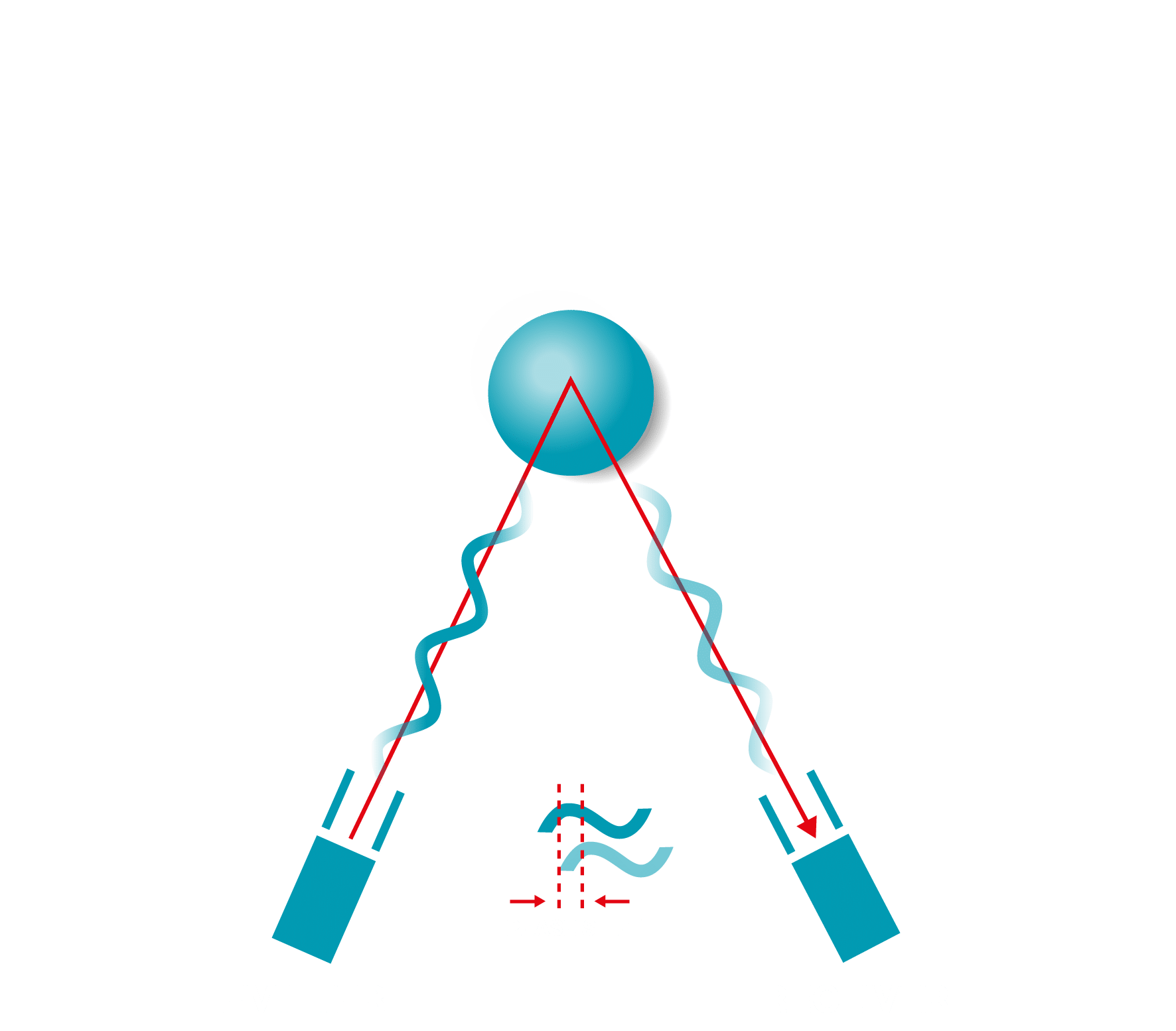

Working Principle Structured Light

STRUCTURED LIGHT

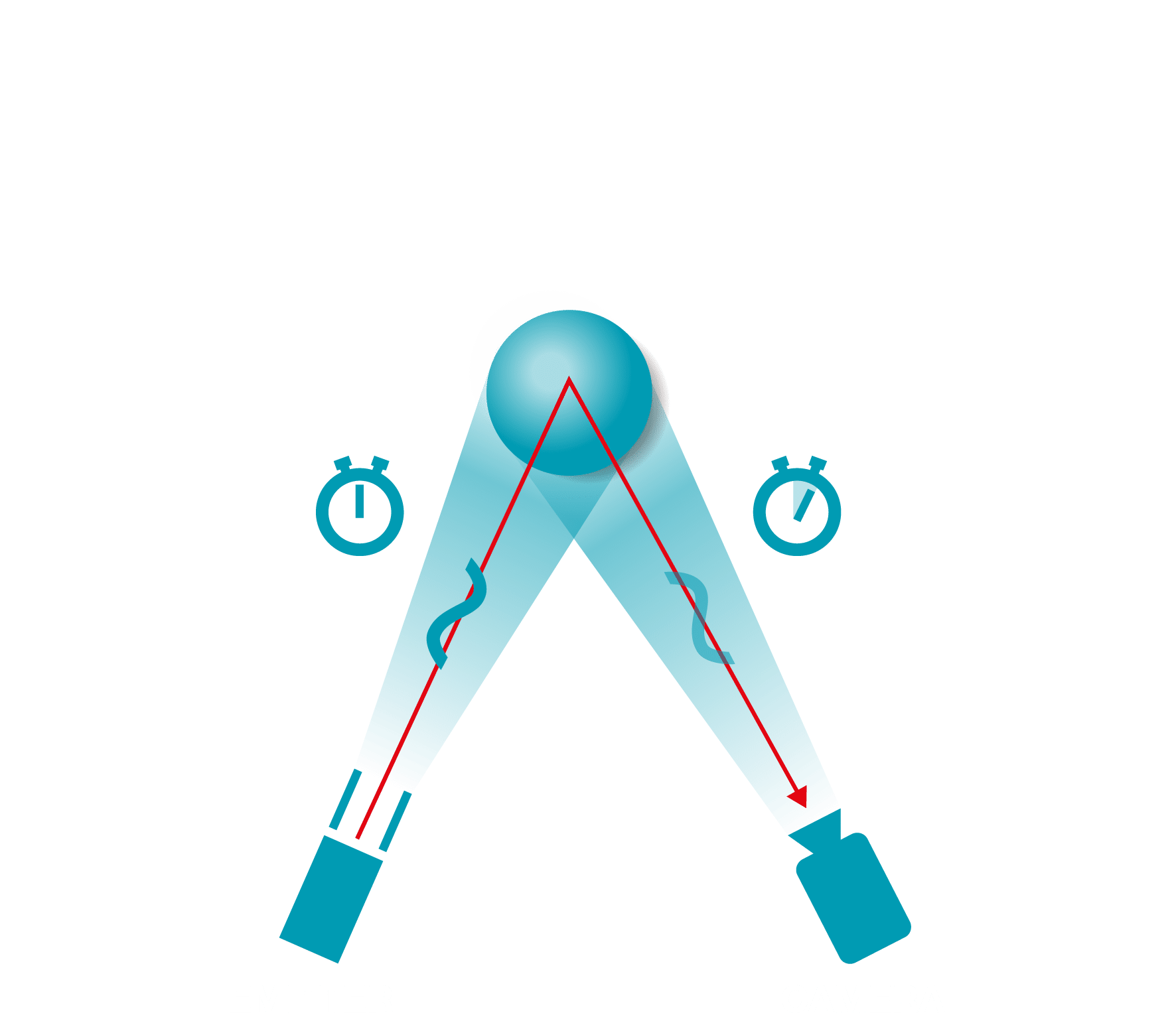

Working Principle Time of Flight

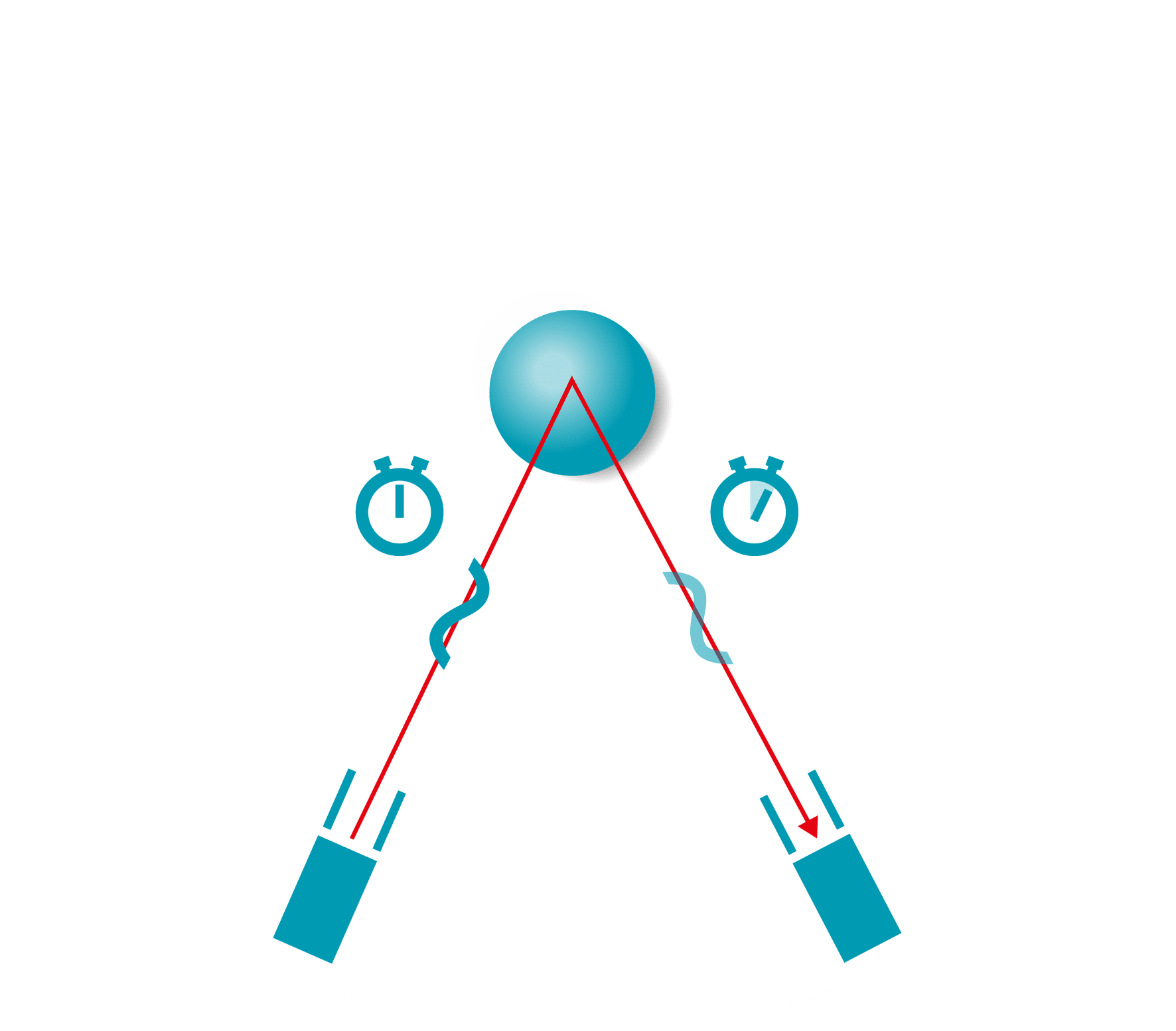

In computer vision, Time of Flight (ToF) refers to the principle of measuring the time light takes to travel a certain distance. Because the speed of light is known, the distance can be calculated from this time, which is directly proportional to it. Emitter and receiver are usually combined in a single device, so the measured time covers the round trip to the object and back, and the distance to the object is half the speed of light multiplied by that time. The light is usually emitted by an LED or a laser in the infrared spectrum. Several implementations are possible: flash-based ToF cameras are commonly distinguished from scanning-based light detection and ranging (LiDAR), and direct ToF from indirect ToF systems.
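The round-trip relation can be written down directly. The following sketch assumes the emitter and receiver sit in the same device, so the one-way distance is d = c · t / 2; the function name is illustrative only.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact by definition of the meter


def distance_from_round_trip(time_s: float) -> float:
    """Distance to the target from the measured round-trip time.

    The light travels to the object and back, so the one-way distance
    is half of (speed of light * time).
    """
    if time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_S * time_s / 2.0


# A 10 ns round trip corresponds to a target roughly 1.5 m away.
print(distance_from_round_trip(10e-9))
```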

Like structured light, cameras leveraging the ToF principle are susceptible to interference from other cameras or external light sources that emit at the same wavelength. In multi-camera setups, this can be resolved by synchronizing the cameras. Overall benefits are high accuracy, independence from external illumination, and the ability to retrieve depth information from surfaces with little to no texture.
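One possible synchronization scheme is time-division multiplexing: each camera emits and integrates only in its own time slot within every frame, so no two cameras illuminate the scene at once. The helper below is a hypothetical sketch of that slot assignment, not a vendor API; real cameras expose synchronization through their own trigger or sync interfaces, and other schemes exist.

```python
def assign_exposure_slots(num_cameras: int, frame_period_ms: float,
                          exposure_ms: float) -> list[tuple[float, float]]:
    """Give each camera its own non-overlapping exposure window per frame.

    Hypothetical helper: camera i is active only during
    [i * exposure_ms, (i + 1) * exposure_ms) within every frame period.
    """
    if num_cameras * exposure_ms > frame_period_ms:
        raise ValueError("exposures do not fit into one frame period")
    return [(i * exposure_ms, (i + 1) * exposure_ms) for i in range(num_cameras)]


# Three cameras at 30 fps (about 33.3 ms per frame), 5 ms exposure each.
for cam, (start, end) in enumerate(assign_exposure_slots(3, 1000 / 30, 5.0)):
    print(f"camera {cam}: {start:.1f} to {end:.1f} ms")
```

The trade-off is visible in the check at the top: every added camera consumes exposure time, so the number of cameras that can share a scene is bounded by frame rate and exposure length.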

DIRECT TIME OF FLIGHT SCANNING

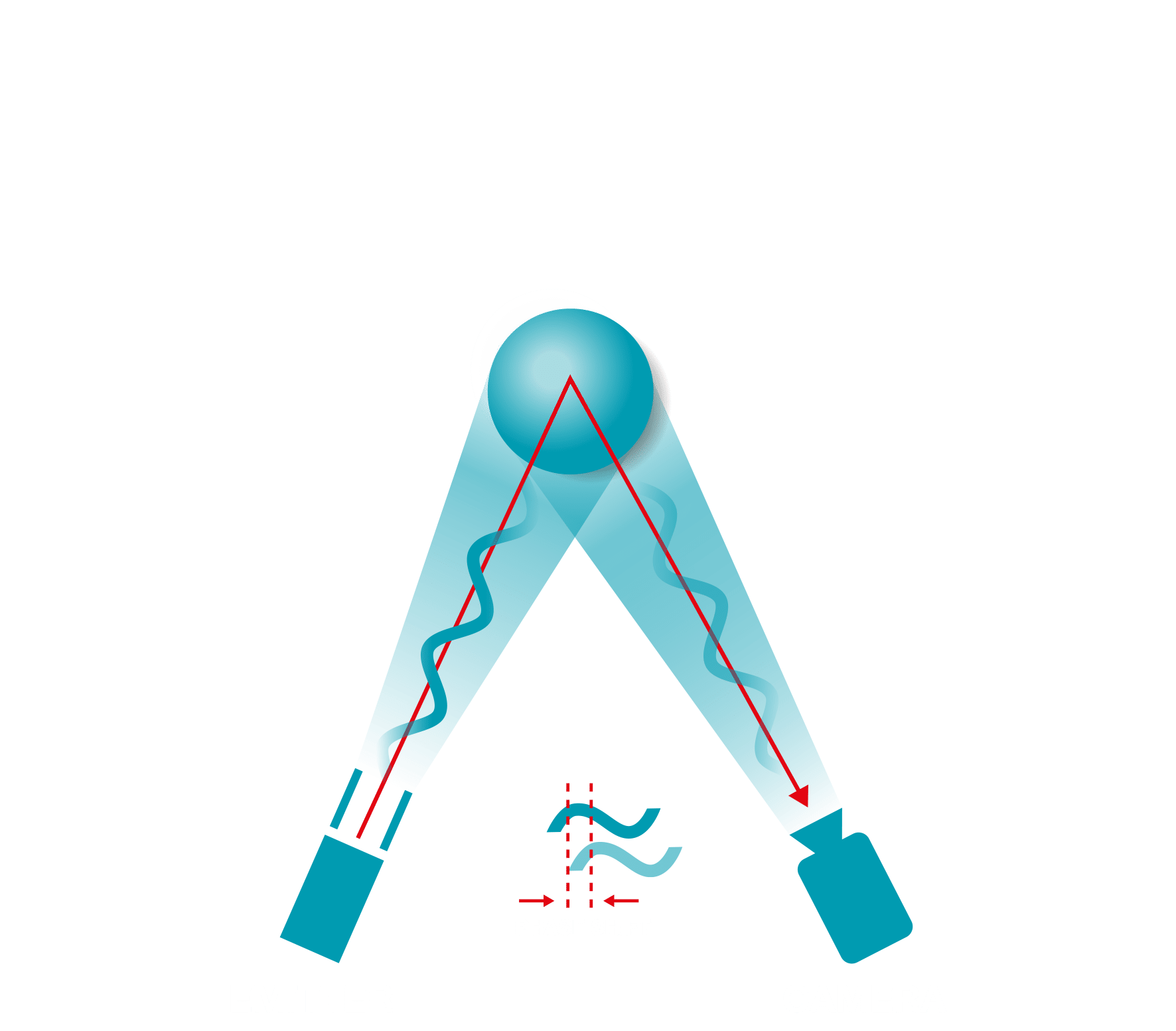

INDIRECT TIME OF FLIGHT SCANNING

Direct ToF (dToF) emits a single light pulse and calculates the distance from the time difference between the emitted pulse and the received reflection. Indirect ToF (iToF), in contrast, uses a continuously modulated or coded light signal and calculates the distance from the phase difference between the emitted and the received reflected light. dToF is most commonly implemented in scanning-based LiDARs (see illustration ToF Principle), whereas iToF is the main principle for flash-based cameras (see illustration iToF Camera).
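For a continuously modulated signal with modulation frequency f_mod, a phase shift φ corresponds to the distance d = c · φ / (4π · f_mod); the 4π reflects the round trip. The sketch below assumes a common four-sample scheme in which the received signal is correlated with the reference at offsets of 0°, 90°, 180° and 270°. Sample ordering and sign conventions differ between sensors, and the function names are illustrative.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def itof_phase(c0: float, c1: float, c2: float, c3: float) -> float:
    """Phase shift in [0, 2*pi) from four correlation samples.

    Assumes sample k was taken at a reference offset of k * 90 degrees,
    i.e. c_k = offset + amplitude * cos(phase - k * pi / 2).
    """
    return math.atan2(c1 - c3, c0 - c2) % (2 * math.pi)


def itof_distance(phase_rad: float, f_mod_hz: float) -> float:
    """d = c * phase / (4 * pi * f_mod); 4*pi accounts for the round trip."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Beyond c / (2 * f_mod) the phase wraps around and distances alias."""
    return SPEED_OF_LIGHT_M_S / (2 * f_mod_hz)


# Simulate a target at 3 m with 20 MHz modulation (illustrative values).
f_mod = 20e6
true_phase = 4 * math.pi * f_mod * 3.0 / SPEED_OF_LIGHT_M_S
samples = [100 + 50 * math.cos(true_phase - k * math.pi / 2) for k in range(4)]
print(round(itof_distance(itof_phase(*samples), f_mod), 6))  # 3.0
print(round(unambiguous_range(f_mod), 3))  # 7.495
```

The constant offset (ambient light) cancels in the differences c1 - c3 and c0 - c2, which is why the phase estimate does not depend on it.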

iToF offers the benefit of higher accuracy without requiring extremely high sampling rates for the laser light pulses. This makes it possible to capture at higher resolutions and wider fields of view with high frame rates at reasonable cost.
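The sampling-rate argument becomes concrete with a short calculation: a dToF receiver must resolve the round-trip time, and a depth step Δd corresponds to Δt = 2 · Δd / c. This single-shot estimate is a simplification that ignores averaging over many pulses; the function name is illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def required_timing_resolution_s(depth_resolution_m: float) -> float:
    """Round-trip timing resolution a dToF receiver needs for a depth step."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_S


for res_mm in (10.0, 1.0):
    dt_ps = required_timing_resolution_s(res_mm / 1000) * 1e12
    print(f"{res_mm:g} mm depth resolution needs about {dt_ps:.2f} ps timing resolution")
```

Resolving millimeters directly therefore requires picosecond-scale timing, whereas iToF derives depth from a phase measured at modulation frequencies in the megahertz range.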

ToF Flash-Based Cameras

DIRECT TIME OF FLIGHT FLASH

INDIRECT TIME OF FLIGHT FLASH

LiDAR

LiDAR (light detection and ranging) usually describes a specific scanning-based ToF technology, although the term applies to all ToF camera technologies. A common LiDAR setup consists of a laser source that emits the laser pulses, a scanner that deflects the light onto the scene, and a detector that picks up the reflected light. Traditionally, such LiDARs are based on dToF and scan the scene with a mirror that mechanically directs the laser beam across it. Recently, solid-state iToF designs that guide the laser with a MEMS mirror have been gaining traction.

The scanning process is repeated up to millions of times per second and produces a precise 3D point cloud of the environment. General benefits are high precision, reliability, and long range, which depends on the laser power.
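Each measurement of a scanning LiDAR yields a range along a known beam direction; converting these spherical coordinates to Cartesian ones produces the point cloud. The sketch below assumes a simple raster scan and a hypothetical axis convention (x forward, y left, z up); actual sensors document their own conventions and scan patterns, and the scan-pattern numbers are illustrative only.

```python
import math


def scan_to_point(range_m: float, azimuth_rad: float,
                  elevation_rad: float) -> tuple[float, float, float]:
    """Convert one range measurement along a beam direction into x, y, z.

    Assumed convention: x forward, y left, z up; azimuth is measured in the
    horizontal plane from x towards y, elevation upwards from that plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))


def raster_scan(ranges: list[list[float]], azimuths: list[float],
                elevations: list[float]) -> list[tuple[float, float, float]]:
    """Build a point cloud from a raster scan: one range per (elevation, azimuth)."""
    return [scan_to_point(r, az, el)
            for el, row in zip(elevations, ranges)
            for az, r in zip(azimuths, row)]


cloud = raster_scan([[1.0, 1.0], [2.0, 2.0]], azimuths=[-0.1, 0.1], elevations=[0.0, 0.05])
print(len(cloud))  # 4

# Hypothetical scan pattern: 128 lines x 2048 points per line at 10 frames/s.
points_per_second = 128 * 2048 * 10
print(points_per_second)  # 2621440
```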