Embedded vision and AI are seen as a winning duo for new applications – but developers first have to overcome a number of hurdles

Embedded vision systems are making their way into industrial machine vision and driving miniaturization further. By merging with the main system, they make machine vision systems smaller and more cost-effective. Typical embedded vision applications are currently emerging in robotics, intralogistics and mixed reality. However, deep integration and a growing number of parameters also increase complexity, which poses new challenges for camera developers.

Deep integration of the vision subsystem into the end product can optimize both performance and cost for a specific application. The greatest challenge is to strike a balance between a specific, highly optimized vision solution on the one hand and easy accessibility, fast development cycles and extensive scalability on the other. This is why custom development is often unavoidable. Parameters such as cost, size, performance and energy requirements can only be balanced effectively by thoroughly analyzing the requirements and translating them into the right specifications. This often means breaking new ground, because embedded vision relies on technologies and architectures that are unfamiliar in conventional industrial machine vision.

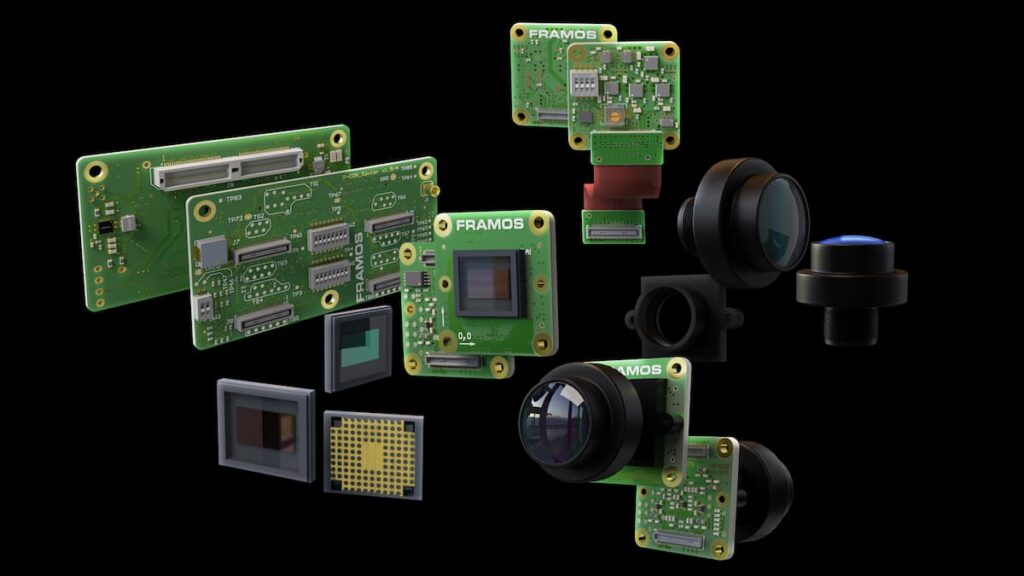

Designing embedded vision systems in a modular way

The embedded vision component and the main system are often developed in parallel so that the two can be matched to each other and deliver maximum synergy. Embedded vision avoids conventional architectures based on host PCs, Ethernet/USB interfaces and cameras in their own housings. Instead, the system is divided into modules that share as many of the available resources as possible, such as the power supply, the main processor or the housing. For this to work, the individual modules, including the software, have to be closely coordinated with each other. Only then can the system efficiently transmit and process the captured images.

When artificial intelligence (AI) is applied, some special considerations come into play. In this case, camera data is evaluated in real time and decisions are made on the basis of this data. Current embedded architectures offer sufficient computing power for this. However, it is important to clarify in advance which data, features or objects are relevant for the application so that the system can be designed appropriately in terms of resolution, sensitivity and data rate.

The combination of AI and embedded vision opens up previously unforeseen possibilities, and the creativity of manufacturers seemingly knows no bounds. Applications ranging from delivery drones to automated sports analysis in the home to automatic feeding machines for pets are emerging. However, many developers and designers have to learn that although the necessary technology exists, it is usually so complex that it cannot be integrated successfully within the intended budget and timeframe.

Seeing the overall system as one unit

The key challenge for developers is to define the requirements precisely and completely right at the start of an imaging project, including the commercial framework for subsequent series production. Only then can the solution be developed and prepared in such a way that the transfer to series production is smooth and rapid. In doing so, all vision components must be seen and evaluated as one system. The sensor, lens and processor cannot simply be selected independently of each other, because they partly depend on one another. There are usually many parameters that can be tuned to optimize a solution, and the effects of each adjustment need to be understood.

Example: At a fixed resolution, smaller pixels allow for a smaller sensor, which is less expensive but usually also has lower sensitivity and saturation capacity. Users can then usually choose a smaller lens, which offers another price advantage, but they need to make sure that the lens resolves finely enough to match the small pixel size of the sensor. In addition, the lens must have a shorter focal length to achieve the same angle of view that a longer focal length would give on a larger sensor. This affects the aperture and consequently the depth of field and the available light.
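The relationship between pixel size, sensor size and focal length described in this example can be sketched numerically. The following is a minimal illustration, assuming an ideal rectilinear lens focused at infinity; the pixel pitches, the pixel count and the 8 mm reference focal length are hypothetical values chosen for illustration, not figures from a specific sensor or lens.

```python
import math

def sensor_width_mm(pixels_across, pixel_pitch_um):
    """Active sensor width for a given pixel count and pixel pitch."""
    return pixels_across * pixel_pitch_um / 1000.0

def angle_of_view_deg(width_mm, focal_length_mm):
    """Horizontal angle of view of an ideal rectilinear lens at infinity focus."""
    return math.degrees(2 * math.atan(width_mm / (2 * focal_length_mm)))

def focal_length_for_same_view(ref_focal_mm, ref_width_mm, new_width_mm):
    """Focal length that keeps the angle of view when the sensor width changes."""
    return ref_focal_mm * new_width_mm / ref_width_mm

def sensor_nyquist_lp_per_mm(pixel_pitch_um):
    """Highest spatial frequency the pixel grid can sample (line pairs per mm)."""
    return 1000.0 / (2 * pixel_pitch_um)

# Hypothetical comparison: the same 1920-pixel width at two pixel pitches.
large_width = sensor_width_mm(1920, 3.45)
small_width = sensor_width_mm(1920, 2.0)

ref_focal = 8.0  # assumed focal length on the larger sensor, in mm
small_focal = focal_length_for_same_view(ref_focal, large_width, small_width)

print(f"sensor width: {large_width:.2f} mm -> {small_width:.2f} mm")
print(f"focal length: {ref_focal:.2f} mm -> {small_focal:.2f} mm")
print(f"angle of view: {angle_of_view_deg(small_width, small_focal):.1f} degrees")
print(f"lens must resolve about {sensor_nyquist_lp_per_mm(2.0):.0f} lp/mm "
      f"instead of {sensor_nyquist_lp_per_mm(3.45):.0f} lp/mm")
```

The focal length scales linearly with the sensor width, while the resolving power the lens needs rises in inverse proportion to the pixel pitch, which is why the cheaper, smaller lens still has to be a sharp one.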

System architecture and interfaces are key

Higher pixel density means more data in the imaging pipeline. The system must be able to transmit and process this volume of data. However, the bandwidth of a vision system is limited, so a compromise must be found between resolution, pixel bit depth and frame rate. The interface to the processor system is extremely important for the transmission of all image data and consequently for data processing. It is important to ensure that there are always sufficient resources to meet the requirements of the application.
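The compromise between resolution, pixel bit depth and frame rate can be estimated with simple arithmetic before any hardware is selected. The sketch below assumes uncompressed pixel data; the 5 Gbit/s link rate and the 80 percent usable share are hypothetical placeholders, not properties of any particular interface.

```python
def raw_data_rate_gbps(width, height, bits_per_pixel, fps):
    """Uncompressed sensor data rate in Gbit/s (payload only, no protocol overhead)."""
    return width * height * bits_per_pixel * fps / 1e9

def max_frame_rate(width, height, bits_per_pixel, link_gbps, efficiency=0.8):
    """Highest frame rate a link can carry.

    `efficiency` is an assumed share of the nominal link rate that is
    available for pixel data after protocol overhead.
    """
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return usable_bits_per_second / (width * height * bits_per_pixel)

# Hypothetical configurations compared against an assumed 5 Gbit/s link.
link_gbps = 5.0
for name, (w, h, bits, fps) in {
    "Full HD, 10 bit, 60 fps": (1920, 1080, 10, 60),
    "12 MP, 12 bit, 60 fps": (4096, 3000, 12, 60),
}.items():
    rate = raw_data_rate_gbps(w, h, bits, fps)
    limit = max_frame_rate(w, h, bits, link_gbps)
    print(f"{name}: {rate:.2f} Gbit/s needed, link allows up to {limit:.1f} fps")
```

In this example the 12 MP configuration exceeds the assumed link budget, so resolution, bit depth or frame rate would have to be reduced, or a faster interface chosen.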

Usually, all the data from the sensors is in RAW format, so a series of mathematical transformations has to be performed to obtain an image that corresponds to what our eyes are used to. Different algorithms are implemented in different systems, and depending on the RAW data – defined by the sensor and lens characteristics – some achieve better and others worse image quality. Therefore, appropriate tests are recommended here to find out empirically how the best results can be achieved in each case.
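To give an idea of what such transformations look like, here is a deliberately simplified sketch of a RAW processing chain: black-level subtraction, white balance, demosaicing and gamma encoding. It assumes a 10-bit sensor with an RGGB Bayer color filter, which the text above does not specify; the black level, white-balance gains and the plain 2.2 power-law gamma are hypothetical values, and real pipelines use considerably more elaborate algorithms.

```python
def process_raw_rggb(raw, black_level=64, white_level=1023,
                     wb_gains=(2.0, 1.0, 1.5), gamma=2.2):
    """Convert an RGGB Bayer mosaic (rows of integers) to 8-bit RGB pixels.

    Every 2x2 block (R G / G B) is merged into one RGB pixel, so the output
    has half the width and half the height of the input. All default
    parameter values are illustrative assumptions.
    """
    scale = white_level - black_level

    def normalize(value):
        # Remove the sensor's black offset and map to the range 0.0 .. 1.0.
        return min(max((value - black_level) / scale, 0.0), 1.0)

    image = []
    for y in range(0, len(raw) - 1, 2):
        row = []
        for x in range(0, len(raw[y]) - 1, 2):
            red = normalize(raw[y][x])
            green = (normalize(raw[y][x + 1]) + normalize(raw[y + 1][x])) / 2
            blue = normalize(raw[y + 1][x + 1])
            pixel = []
            for channel, gain in zip((red, green, blue), wb_gains):
                balanced = min(channel * gain, 1.0)      # white balance
                encoded = balanced ** (1.0 / gamma)      # gamma encoding
                pixel.append(round(255 * encoded))
            row.append(tuple(pixel))
        image.append(row)
    return image

# Hypothetical 4x4 mosaic: saturated on the left, black on the right.
mosaic = [
    [1023, 1023, 64, 64],
    [1023, 1023, 64, 64],
    [1023, 1023, 64, 64],
    [1023, 1023, 64, 64],
]
for row in process_raw_rggb(mosaic):
    print(row)
```

Changing the gains or the gamma value changes the resulting image noticeably, which illustrates why empirical tests with the actual sensor and lens are worthwhile.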

The right system architecture offers significant potential savings in power consumption, size (thanks to a smaller form factor) and cost. At the same time, current technology is powerful enough that the image quality can be adjusted and optimized for the application with hardly any compromises.

Factor manufacturability into all evaluations

Even at the design stage of a vision product, it has to be determined whether it can be mass-produced and whether the functionality of the mass-produced product is guaranteed. Once the architecture is defined and the design is complete, the production processes have to be set up in such a way that consistent quality is achieved and the application runs successfully in practice under the specified conditions. Subsequent corrections must be avoided at all costs, because they cost time (longer time to market) and cause follow-up costs that are difficult to calculate. So that manufacturers can concentrate fully on their core competencies and developers of embedded vision systems can take as little risk as possible, it is advisable to work with experienced partners such as FRAMOS right from the start.

Dr. Frederik Schönebeck is Head of Custom Solutions at FRAMOS. In this role, he supports customers in designing specific solutions and taking them into series production.